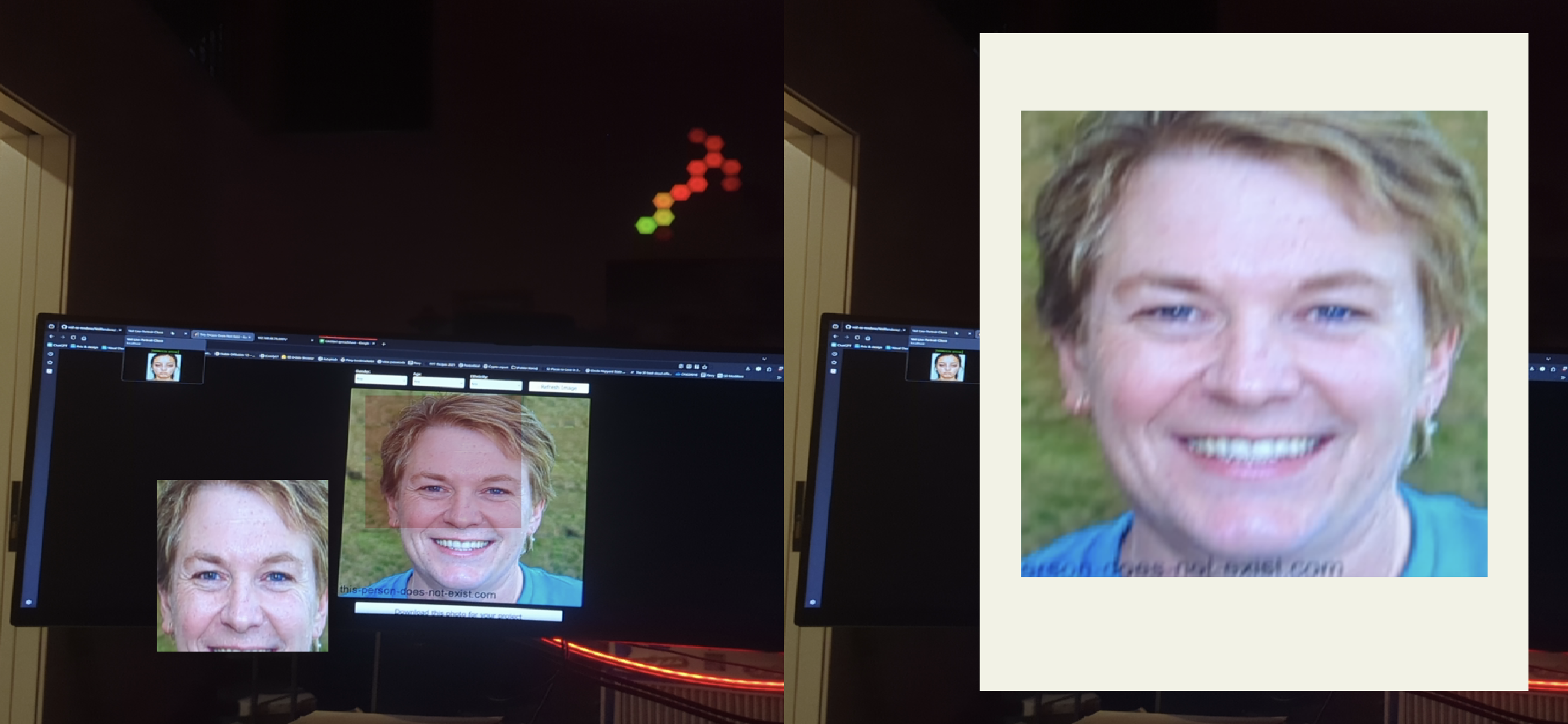

Problem: ML Kit face capture works! However, displaying a static photo of a face on the external screen would be boring. Identity and agency are central to the message of Veil, so I want to do something more with the face than simply display it.

Approach: Use LivePortrait to bring the face to life on the screen. This should add to the horror of unexpectedly seeing one’s own visage AND be a concrete example of how we unwillingly surrender ownership of our identity to digital surfaces.

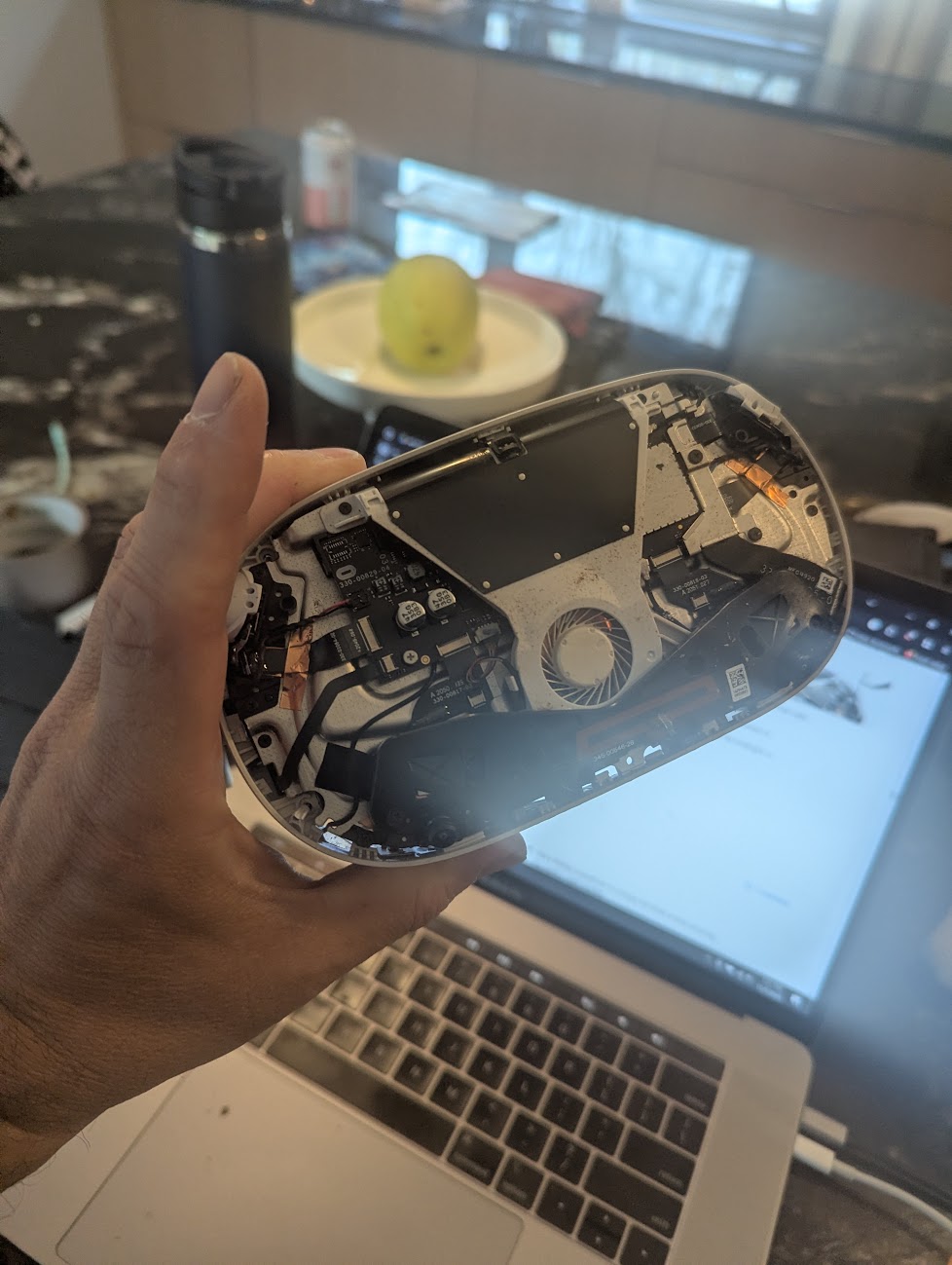

Challenge: While I do have a Raspberry Pi 5 with an AI HAT that I plan to use for onboard compute, animating the face on it may still be very slow. I’ve prototyped by offloading to my desktop GPU over Tailscale, and it was seriously slow. For this to be effective, it needs to run in near real time.
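To put a number on "seriously slow", a small timing harness could help compare the Pi against the desktop-over-Tailscale path. This is only a rough sketch: `measure_fps` and `meets_budget` are hypothetical helpers, `animate_frame` stands in for whatever call ends up producing one animated frame (it is not LivePortrait's actual API), and the 15 fps target is an assumed placeholder for "near real time", not a measured requirement.

```python
import time
from statistics import mean
from typing import Any, Callable, Iterable


def measure_fps(animate_frame: Callable[[Any], Any],
                frames: Iterable[Any],
                warmup: int = 2) -> dict:
    """Time a per-frame callable and report latency and throughput.

    `animate_frame` is a placeholder for the real animation step
    (local inference or a round trip to a remote GPU).
    """
    frames = list(frames)
    if not frames:
        raise ValueError("need at least one frame to measure")

    # Warm-up calls are not timed (model load, caches, connection setup).
    for frame in frames[:warmup]:
        animate_frame(frame)

    latencies = []
    for frame in frames:
        start = time.perf_counter()
        animate_frame(frame)
        latencies.append(time.perf_counter() - start)

    mean_latency = mean(latencies)
    return {
        "mean_latency_s": mean_latency,
        "worst_latency_s": max(latencies),
        "fps": 1.0 / mean_latency if mean_latency > 0 else float("inf"),
    }


def meets_budget(stats: dict, target_fps: float = 15.0) -> bool:
    """Return True if measured throughput reaches the assumed target."""
    return stats["fps"] >= target_fps


if __name__ == "__main__":
    # Stand-in workload: pretend each frame takes about 50 ms.
    fake_animate = lambda frame: time.sleep(0.05)
    stats = measure_fps(fake_animate, range(10))
    print(stats, "near real time:", meets_budget(stats))
```

Running the same harness against both paths would show whether the bottleneck is the model itself or the network hop, which should help decide where the compute lives.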